The 2025 *News Integrity in AI Assistants* study analysed responses from four AI assistants across 18 countries and 14 languages, evaluating more than 3,000 answers to real-world news queries.

The findings highlight significant risks to public trust, particularly among younger audiences, who increasingly rely on AI to access news.

According to the report, 45% of AI-generated answers had at least one significant issue, and 31% had serious sourcing problems. In many cases, AI assistants provided flawed or misleading information: 20% of responses contained major accuracy issues, such as hallucinated details or outdated information.

‘This research conclusively shows that these failings are not isolated incidents,’ said EBU Media Director and Deputy Director General Jean Philip De Tender. ‘They are systemic, cross-border, and multilingual, and we believe this endangers public trust. When people don’t know what to trust, they end up trusting nothing at all, and that can deter democratic participation.’

The study, of unprecedented international scope and scale, was launched at the EBU News Assembly in Naples on 22 October. Involving 22 public service media (PSM) organizations in 18 countries, working in 14 languages, it identified multiple systemic issues across four leading AI tools.

Professional journalists from participating PSM evaluated more than 3,000 responses from ChatGPT, Copilot, Gemini, and Perplexity against key criteria, including accuracy, sourcing, distinguishing opinion from fact, and providing context.

‘The AI assistants they tested consistently churned out garbled facts, fabricated or misattributed quotes, decontextualised information or paraphrased reporting with no acknowledgement,’ write EBU Director of News Liz Corbin and WAN-IFRA CEO Vincent Peyrègne in an op-ed about the research.

The study found that AI assistants often failed to properly attribute sources, making it difficult for users to verify the origin or credibility of the information provided.

The Reuters Institute's *Generative AI and News Report 2025* quantified the trend: weekly use of generative AI specifically for getting news doubled from 3% to 6% between 2024 and 2025 across the six countries surveyed (Argentina, Denmark, France, Japan, the UK, and the US).

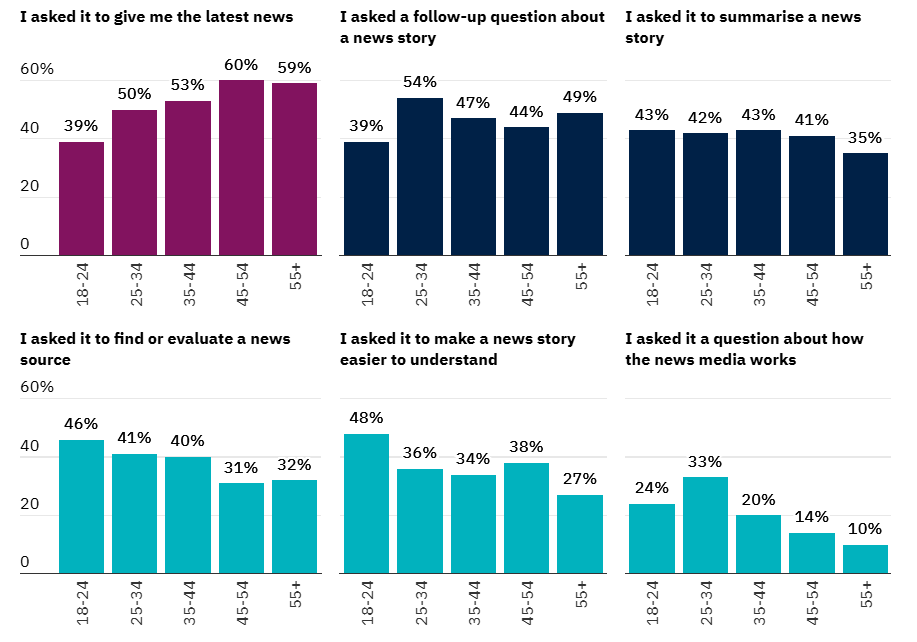

Younger users (18–24s) are more likely to use AI to help them understand the news: 48% use it to make a story easier to understand, compared with 27% of those aged 55 and over.

The 18–24 age group also has the highest weekly use of generative AI for news, at 8%, compared with 5% among those aged 55 and over.

Peter Archer, BBC Programme Director, Generative AI, said: ‘We’re excited about AI and how it can help us bring even more value to audiences. But people must be able to trust what they read, watch and see. Despite some improvements, it’s clear that there are still significant issues with these assistants. We want these tools to succeed and are open to working with AI companies to deliver for audiences and wider society.’

The EBU and BBC are urging tech companies, regulators, and news organizations to collaborate on stricter standards for AI-generated news content. The study recommends:

- improved sourcing transparency in AI responses
- better training data to reduce misinformation
- clear labelling of AI-generated content
- ongoing auditing of AI tools by independent bodies

The same issues appeared across the languages tested, including English, French, German, and Spanish, suggesting that the problem is not language-specific but systemic to current AI assistants.

In the Reuters Institute survey, information-seeking is the primary use of generative AI overall, at 24% weekly usage, whereas getting news (6%) remains a specialized and limited application.


Image: made in Canva.com