Six European countries (Spain, France, Portugal, Denmark, Greece, and the Netherlands) are now actively examining or developing policies that would limit young people's use of social media services. Additionally, the United Kingdom is examining harm-prevention measures and age assurance for pornography sites under its Online Safety Act, which began to be enforced in 2025. The initiative follows Australia's recent decision to prohibit social media access for children under 16, a ban that affects platforms including Instagram, Facebook, Snapchat, X (formerly Twitter), TikTok, and YouTube.

Spanish Prime Minister Pedro Sánchez has emerged as one of the most outspoken proponents of restrictions, advocating for sweeping prohibitions. His position led to a public exchange with Elon Musk, owner of X and CEO of several technology companies.

“Social media has become a failed state,” Sánchez said in a speech in Dubai last week. “I know that it will not be easy. Social media companies are wealthier and more powerful than many nations, including mine. But their might and power should not scare us.”

Musk responded in a post on X: “Dirty Sánchez is a tyrant and traitor to the people of Spain.”

Shortly afterwards, Greece announced it was “very close” to introducing a ban on social media use for minors under 15, according to Reuters. The Ministry of Digital Governance is prepared to move forward with the measure and sees the Kids Wallet app, launched last year, as a tool for enforcing the ban.

Policymakers cite concerns about exposure to harmful content, the addictive nature of platform design, extensive data collection from young users, and evidence suggesting adverse psychological effects. Recent incidents—including the generation of non-consensual sexualised images by Musk's Grok AI system—have reinforced regulators' determination to act.

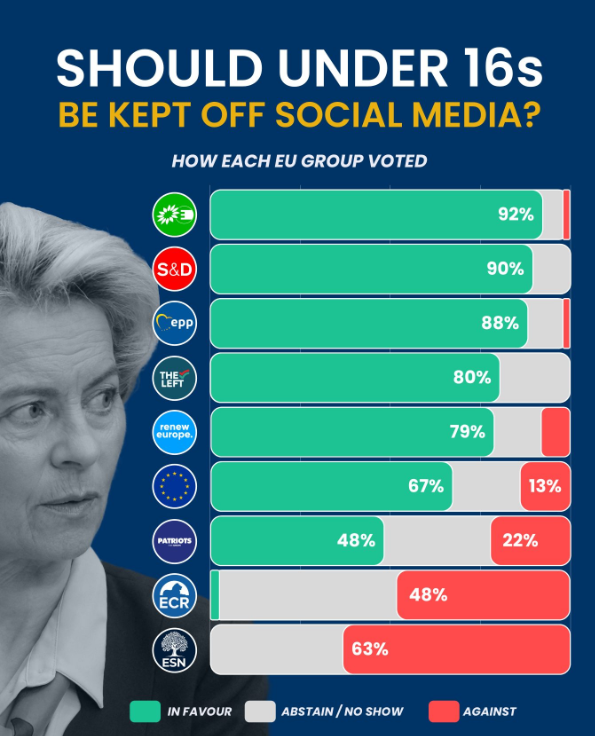

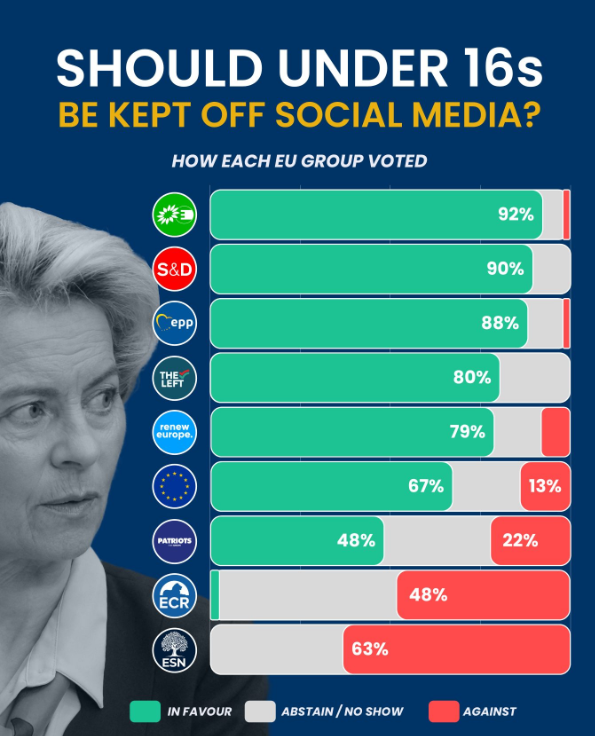

In November, the European Parliament backed such measures by a large majority:

• A unified 16+ digital age limit for social media, with parental consent possible from age 13.

• Stronger DSA enforcement, especially against addictive design like infinite scroll and autoplay.

• A call for personal liability for tech CEOs who repeatedly fail to protect minors.

Implementation of such bans poses substantial technical and practical hurdles. Age verification systems remain imperfect, while virtual private networks could enable users to bypass geographic restrictions. Regulators also face the risk that restrictions might drive young people toward platforms with even less oversight.

Objections and criticism

Privacy organizations such as the Electronic Frontier Foundation (EFF) have criticized these approaches, arguing that technological age verification fundamentally compromises user anonymity, disproportionately affects marginalized communities, and fails to address underlying digital safety challenges. Instead, the EFF proposes replacing age verification with robust platform design that prioritizes user safety through effective content moderation and algorithmic protections. In its view, social media and other online platforms must invest in creating inherently safer digital environments that protect users without compromising their privacy or autonomy.

The European Commission released a blueprint for an age verification solution earlier in the summer, and Greece has been building a technical implementation to test it. The solution allows users to prove they are over 18 without sharing any other personal information. According to the Commission, in addition to being privacy-preserving, it is designed to be user-friendly and fully interoperable with the future EU Digital Identity Wallets, and it can easily be adapted to prove other age thresholds, such as 13+.

The Psychological Society of Ireland said the primary responsibility for protecting children from harm by digital devices must lie with the creators of those technologies, not the users. “A public health approach must incorporate a specific focus on the responsibilities of social media companies to ensure that their platforms are safe for children and young people,” it said, underlining the risk of shifting responsibility away from the platforms that design, and profit from, the problems they create.

“There is also a risk that overly restrictive approaches may push vulnerable young people towards less visible and less regulated online spaces, while focusing on symptoms rather than the underlying causes of distress,” said Professor John Canavan, Director of the UNESCO Child and Family Research Centre at the University of Galway.

The regulatory push carries significant commercial stakes. Europe represents a major market for social media companies, with revenue from the region continuing to grow. Measures that block access to younger users could alter the long-term business models of these platforms and reshape their strategies for European markets. Despite the challenges, officials in the affected countries appear resolved to pursue protective measures for young users in what they describe as increasingly problematic digital spaces.

Image under a CC04 licence in Google